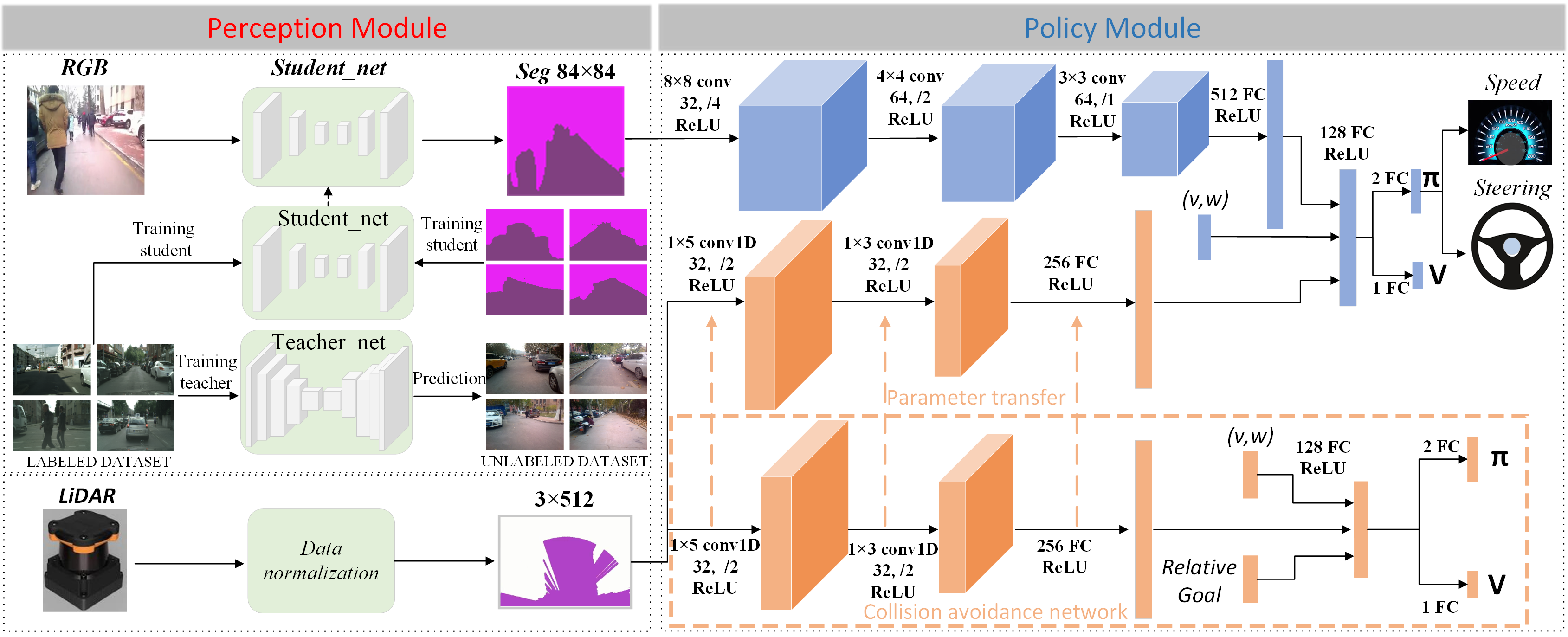

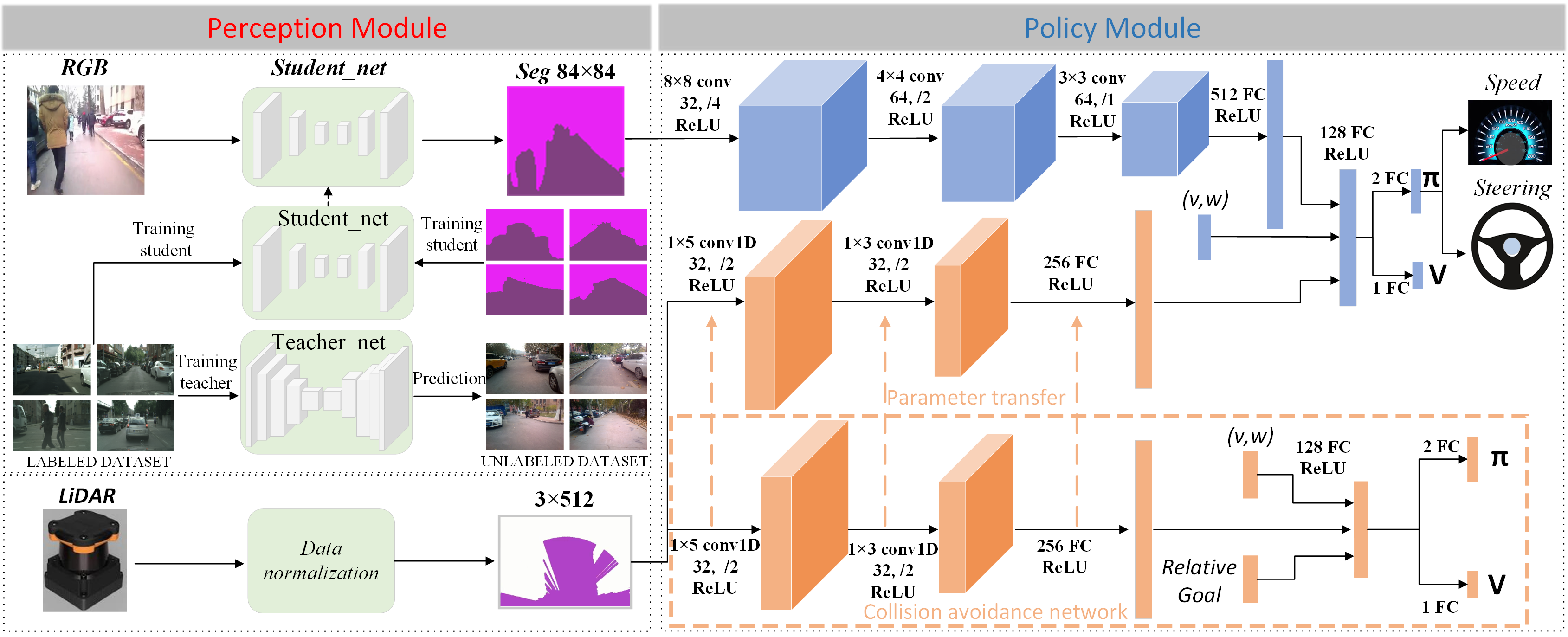

Overview of our multi-modal perception-based deep RL framework.

Abstract

In this paper, we present a novel navigation system for unmanned ground vehicles (UGVs) that performs local path planning with deep reinforcement learning. The system decouples perception from control and uses multi-modal perception to interact reliably with the UGV's surroundings online, which allows direct learning of a policy that generates flexible actions for avoiding collisions with obstacles during navigation. By replacing raw RGB images with their semantic segmentation maps as input and applying a multi-modal fusion scheme, our system, trained only in simulation, can handle real-world scenes containing dynamic obstacles such as vehicles and pedestrians. We also introduce a modal separation learning scheme that accelerates training and further boosts performance. Extensive experiments demonstrate that our method closes the gap between simulated and real environments and outperforms state-of-the-art approaches.
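To make the idea of feeding a policy with segmentation maps plus a second modality more concrete, the following is a minimal illustrative sketch and not the implementation used in the paper. It assumes a hypothetical three-class label set, assumes the second modality is a 1D range scan, and uses plain feature concatenation as a stand-in for the fusion scheme, whose actual design is not described on this page. All function names and parameters (`segmentation_features`, `range_features`, `fuse`, `max_range`) are invented for illustration.

```python
from typing import List, Sequence

# Hypothetical label set (an assumption, not taken from the paper):
# 0 = free space, 1 = static obstacle, 2 = dynamic obstacle.
NUM_CLASSES = 3


def segmentation_features(seg_map: Sequence[Sequence[int]],
                          num_classes: int = NUM_CLASSES) -> List[float]:
    """Summarize a segmentation label map as the fraction of pixels per class."""
    counts = [0] * num_classes
    total = 0
    for row in seg_map:
        for label in row:
            counts[label] += 1
            total += 1
    if total == 0:
        raise ValueError("segmentation map is empty")
    return [c / total for c in counts]


def range_features(ranges: Sequence[float], max_range: float) -> List[float]:
    """Clip range readings to [0, max_range] and scale them to [0, 1]."""
    return [min(max(r, 0.0), max_range) / max_range for r in ranges]


def fuse(seg_map: Sequence[Sequence[int]],
         ranges: Sequence[float],
         max_range: float = 10.0) -> List[float]:
    """Build one observation vector by concatenating both feature sets.

    Concatenation is only a placeholder for a fusion scheme.
    """
    return segmentation_features(seg_map) + range_features(ranges, max_range)


# Toy inputs: a 2x3 label map and three range readings in meters.
seg_map = [[0, 0, 1],
           [0, 2, 2]]
ranges = [2.5, 12.0, 5.0]
obs = fuse(seg_map, ranges)
print(len(obs))  # 3 class fractions + 3 normalized ranges = 6
```

Because the policy in such a setup only sees class labels rather than raw pixel colors, its input does not depend on textures or lighting, which is the intuition behind using segmentation maps to move from simulation to real scenes.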

● Data & Code: Coming soon!

● Video: