Grasp for Stacking via Deep Reinforcement Learning

¹School of Control Science and Engineering, Shandong University; ²Tencent AI Lab

Abstract

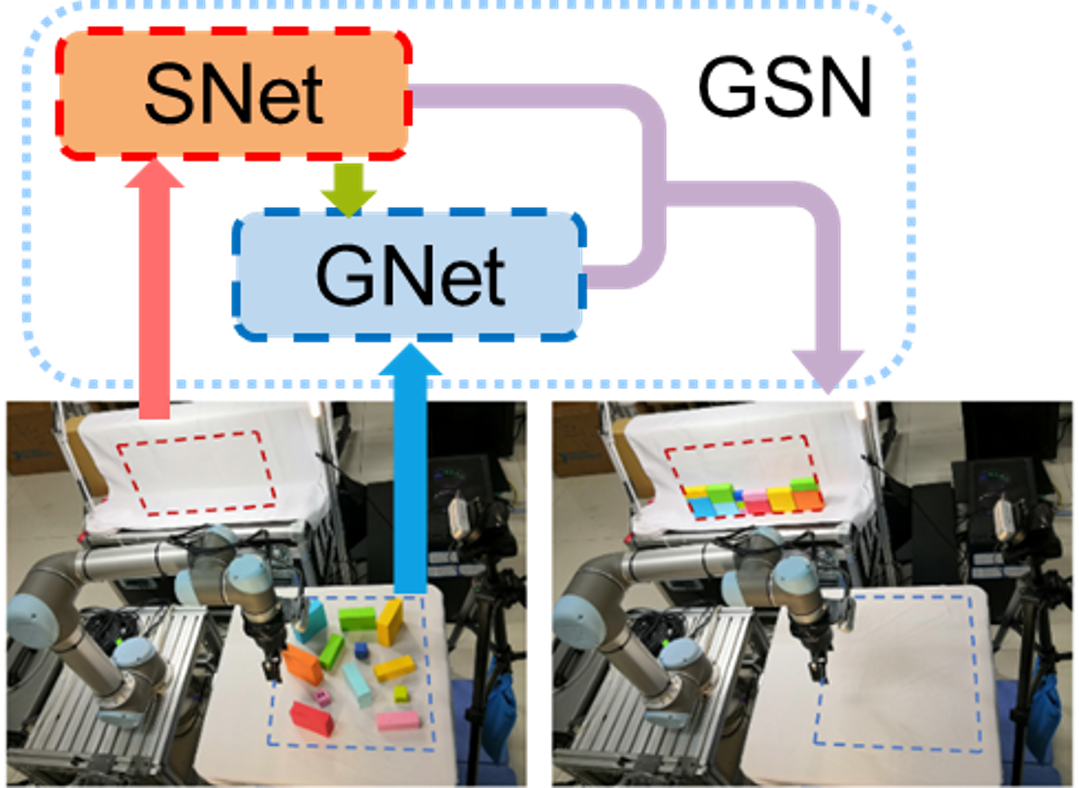

An integrated robotic arm system should support both grasping and placing. However, most grasping methods focus on how to grasp objects and ignore where the grasped objects are placed, which limits their application in industrial environments. In this research, we propose a model-free deep Q-learning method that learns a grasping-stacking strategy end-to-end from scratch. Our method maps images to robotic arm actions through two deep networks: the grasping network (GNet), which uses the observation of the desk and the pile to infer the gripper's position and orientation for grasping, and the stacking network (SNet), which uses the observation of the platform to infer the optimal location for placing the grasped object. To enable long-range planning, the two observations are integrated in the grasping for stacking network (GSN). We evaluate the proposed GSN on a grasping-stacking task in both simulated and real-world scenarios.

Overview

We propose a system that jointly learns the grasping and stacking policies. The grasping network (GNet) infers the gripper’s position and orientation for grasping. The stacking network (SNet) infers the optimal location for placing the grasped object. The observations of the desk and the pile are integrated in the grasping for stacking network (GSN).
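
To make the roles of the two networks concrete, the following is a minimal sketch of greedy action selection in a deep Q-learning setting. It rests on assumptions that are not stated in the text above: that GNet outputs a Q-value map with one channel per discretized gripper rotation, that SNet outputs a single Q-value map over the platform, and that rotations span 180 degrees. The function names `select_grasp`, `select_placement`, and `td_target` are hypothetical, and the sketch does not show how GSN integrates the two observations.

```python
import numpy as np


def select_grasp(q_grasp):
    """Greedy grasp from a Q-map of shape (num_rotations, H, W).

    Assumption: each channel corresponds to one discretized gripper
    rotation, evenly spaced over 180 degrees.
    """
    rot, row, col = np.unravel_index(np.argmax(q_grasp), q_grasp.shape)
    angle_deg = float(rot) * 180.0 / q_grasp.shape[0]
    return (int(row), int(col)), angle_deg


def select_placement(q_stack):
    """Greedy placement location from a Q-map of shape (H, W)."""
    row, col = np.unravel_index(np.argmax(q_stack), q_stack.shape)
    return int(row), int(col)


def td_target(reward, next_q_max, gamma=0.9, done=False):
    """Standard one-step Q-learning target: r + gamma * max_a' Q(s', a')."""
    return reward + (0.0 if done else gamma * next_q_max)


# Toy Q-maps standing in for network outputs (hypothetical values).
q_grasp = np.zeros((4, 5, 5))
q_grasp[2, 1, 3] = 1.0   # best grasp: rotation index 2 at pixel (1, 3)
q_stack = np.zeros((5, 5))
q_stack[4, 0] = 2.0      # best placement at pixel (4, 0)

grasp_pixel, grasp_angle = select_grasp(q_grasp)
place_pixel = select_placement(q_stack)
```

In this sketch the grasp and placement are chosen independently from their own Q-maps; a joint policy such as the one described above would condition the grasp choice on the platform observation as well.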