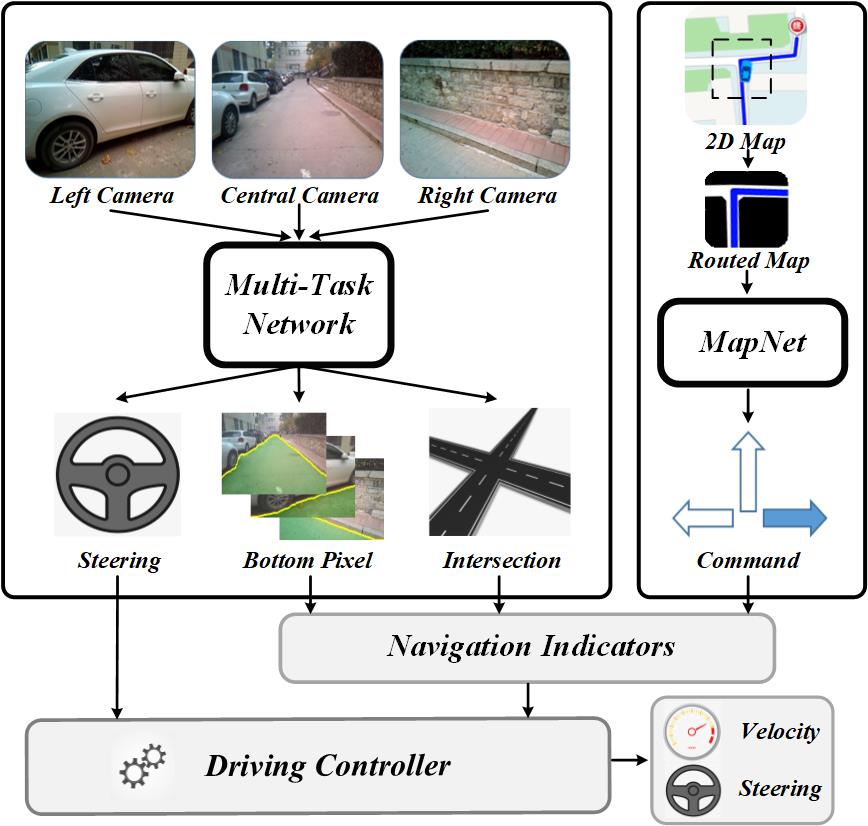

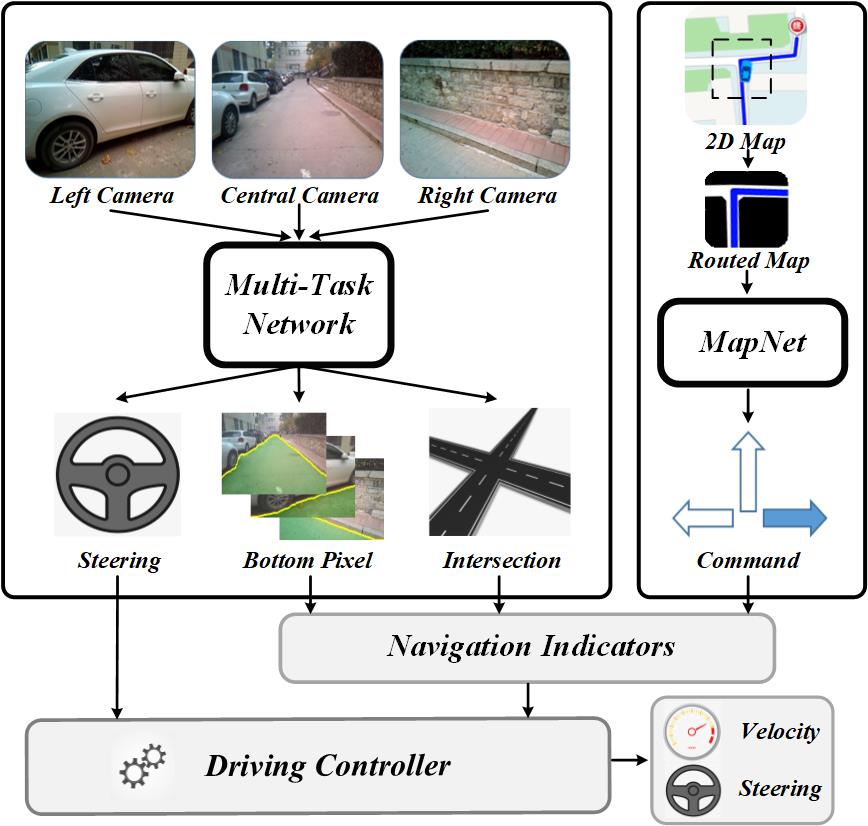

Overview of the proposed method.

Abstract

In this paper, we propose an autonomous robot navigation method based on a multi-camera setup that takes advantage of a wide field of view. A new multi-task network processes the visual information supplied by the left, central, and right cameras to find the passable area, detect intersections, and infer the steering. From the network outputs, three navigation indicators are generated and combined with the high-level control commands extracted by the proposed MapNet; the result is fed into the driving controller. The controller also uses the indicators to adjust the driving velocity, which helps the robot bypass obstacles smoothly. Experiments in real-world environments demonstrate that our method performs well in both local obstacle avoidance and global goal-directed navigation tasks.
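As a rough illustration of the data flow described above (indicators plus a high-level command going into a controller that also sets the speed), the following minimal sketch may help. It is not the paper's controller: the `Command` values, the `Indicators` fields and their ranges, the `turn_bias` parameter, and the speed rule are all assumptions made for illustration only.

```python
from dataclasses import dataclass
from enum import Enum


class Command(Enum):
    # Hypothetical high-level commands; the abstract does not enumerate them.
    FOLLOW = "follow"
    LEFT = "left"
    RIGHT = "right"


@dataclass
class Indicators:
    # Illustrative stand-ins for the three navigation indicators.
    passable: float        # assumed range: 0.0 (blocked) to 1.0 (free)
    at_intersection: bool  # assumed binary intersection flag
    steering: float        # assumed range: -1.0 (left) to 1.0 (right)


def clamp(x: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, x))


def control(ind: Indicators, cmd: Command,
            max_speed: float = 1.0, turn_bias: float = 0.5):
    """Return (steering, speed) from indicators and a high-level command."""
    steer = ind.steering
    # Assumption: the high-level command only matters at an intersection.
    if ind.at_intersection:
        if cmd is Command.LEFT:
            steer -= turn_bias
        elif cmd is Command.RIGHT:
            steer += turn_bias
    steer = clamp(steer, -1.0, 1.0)
    # Assumption: slow down when less space is passable or the turn is sharp.
    speed = max_speed * clamp(ind.passable, 0.0, 1.0) * (1.0 - 0.5 * abs(steer))
    return steer, speed


if __name__ == "__main__":
    print(control(Indicators(0.5, True, 0.0), Command.LEFT))
```

In this sketch the speed scales with the passable-area indicator, so a partially blocked path yields a slower approach rather than a hard stop, which is one simple way to get the smooth obstacle bypassing the abstract mentions.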

● Data & Code: Coming soon!

● Video: